Big-O is pretty important. It is the metric that is used to describe the efficiency of algorithms. Having a thorough understanding of Big-O is crucial for ensuring that algorithms are as efficient as possible. Let’s learn more about this fundamental computer science topic.

What is Big-O?

Imagine that there is a ship builder. Her name is Andrea. She makes big ships and small ships for a port city. She can make the small ships pretty quickly, but the bigger ships take longer. The amount of time that it takes her to build a ship is proportional to the size of the ship.

Andrea is also a pirate. She is known to steal other peoples’ ships. She usually does her pirating in a port town that is 10 days worth of travel by land and 5 days of travel back with the stolen ship, so 15 days round trip. The port town that she pirates from always has the ship size that she is looking for.

If a customer asks Andrea to build a ship, she has two options. She can either build the ship or she could put on her pirate hat and go steal a ship.

So if someone wants Andrea to build a ship for them, which option should Andrea choose? That’s right! It depends.

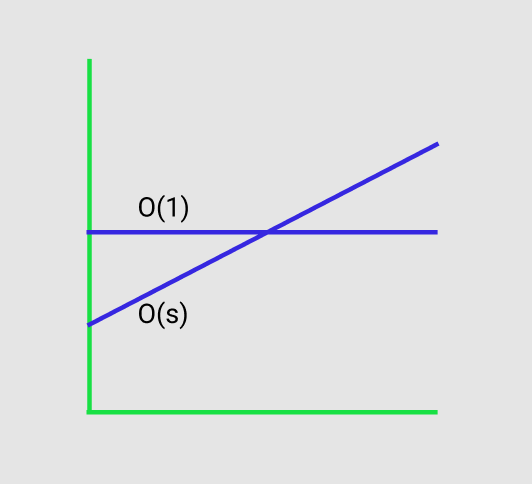

- Build the Ship: O(s): where s is the size of the ship. This is linear time. The time that it takes to build the ship increases linearly depending on the size of the ship.

- Steal the Ship: O(1): this is constant time. It doesn’t matter how big or small the ship is. It will always take Andrea 15 days to get the ship back to the customer.

So Andrea should build the ship if she can get it done in less than 15 days. If the ship is big enough that it would take longer than 15 days to build, then Andrea should go steal the ship.

On a side note, this blog does not condone stealing and Andrea should really rethink her life of crime.

Here is a graph that represents the relationship between linear O(s) and constant O(1) time:

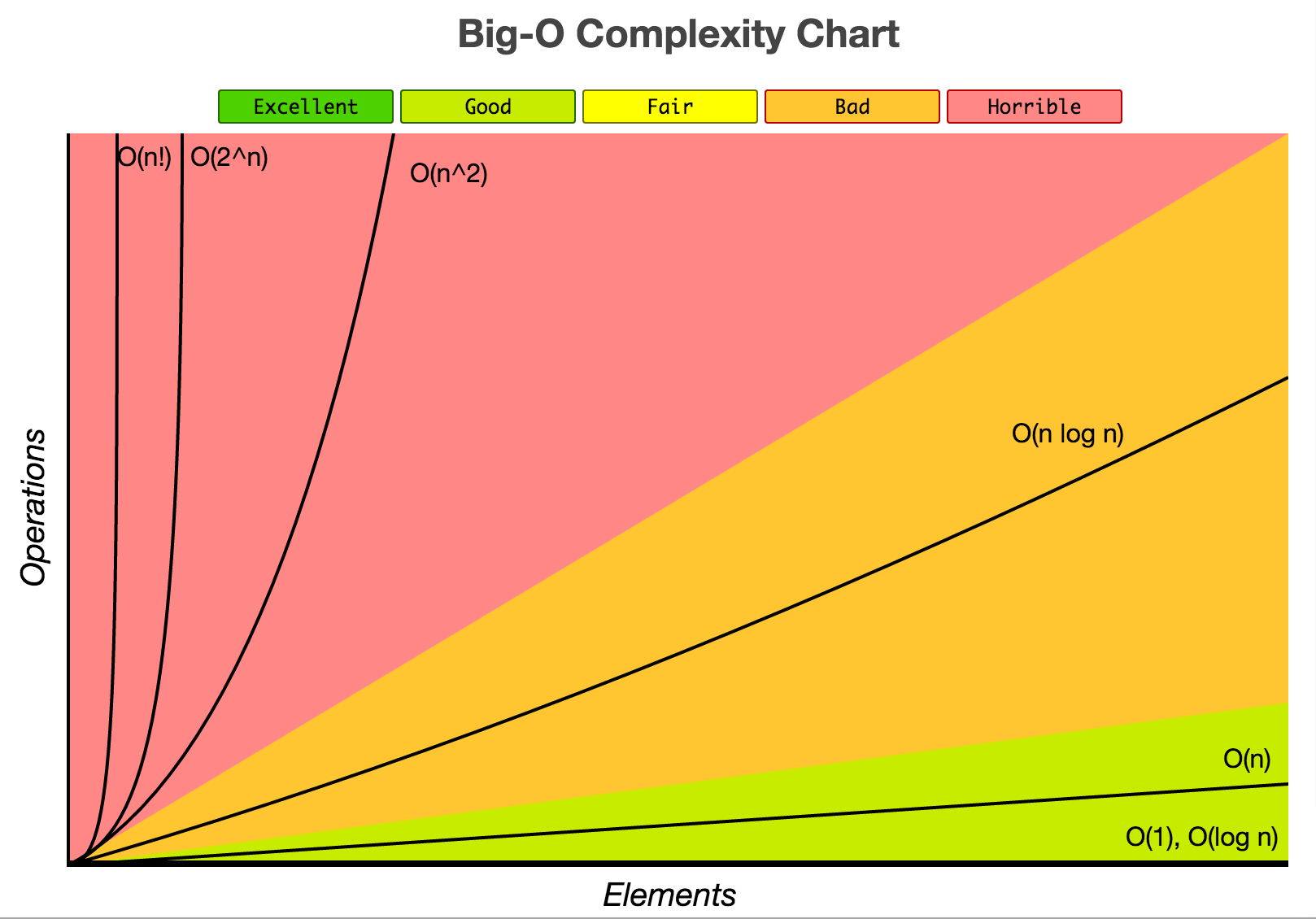

There are many more possible runtimes that can occur besides linear and constant. Here is a graph that shows some of the more commonly-used runtimes:

The above complexity chart comes from the Big-O Cheatsheet website. Definitely a useful resource!

The Three Cases

Let’s use quick sort as an example. Quick sort picks a random element as a pivot and then swaps the values so that the elements less than the pivot appear before the elements that are greater than the pivot. Then it uses recursion to further sort the left and right sides. Learn more about quick sort here.

- Best Case: The best case means that the algorithm is given the most ideal data. If all elements are equal in the array then quick sort will just have to traverse the array once. Traversing an array of N elements will give a runtime of O(N).

- Worst Case: The worst case means that the algorithm is given the least ideal data. With quick sort, it could happen that the pivot is repeatedly the biggest element in the array. If this were to happen then instead of the subarray being recursively divided in half each time, the subbarray would only be reduced by one element. This would give a runtime of O(N 2).

- Expected Case: Typically instead of having a worst case or a best case you are more likely to have an expected case. Sometimes the pivot will be high and sometimes the pivot will be low, but over time they will average each other out. This will give a runtime more close to O(N log N).

The best case runtime usually isn’t of too much interest when analyzing an algorithm. The worst and expected cases are the ones that need to be considered.

Space Complexity

Space complexity is used to describe the total amount of memory that an algorithm uses in respect to the input size of the algorithm.

If an algorithm requires an array of size n, this will require O(n) space. If there is a two dimensional array of size n by n, then O(n2) space is required.

Some Useful Big-O Tips

There are a couple of tips that you should keep in the back of your mind when you are working on finding the Big-O. Here they are:

- No constants

- No non-dominant terms

- Consider multiple runtimes

No Constants

With Big-O time the constants are not taken into consideration.

Big-O notation doesn’t care about constants because Big-O notation only describes the long-term growth rate of functions, rather than their absolute magnitudes.”

An algorithm that might seem to be O(2N) is actually only O(N). Here is a Stack Overflow post that does a pretty good job of describing it.

Take a look at some code:

The runtime is O(array.length) or O(N).

Let’s take look at some more code:

Your first intuition might to be assume that because there are two for loops that iterate the length of the array that the runtime must be O(2N). Don’t fall into this trap! The runtime is O(N). Remember to drop the constant!

No Non-Dominant Terms

What if you get a runtime like O(N 2 + N)? What should we do then? Well, if you were able to deduce that we should drop the non-dominant term, then congratulations!

Consider the runtime O(N 2 + N 2). This is the same as O(2N 2). We know that we should not include constants in our runtimes. So if one of the N 2 terms is ignored then we can ignore the N in O(N 2 + N) as well.

- O(N 2 + N) becomes O(N 2).

- O(N + logN) becomes O(N).

- O(5*2N + 1000N100) becomes O(2N).

Consider Multiple Runtimes

If we had to iterate through two arrays of the same length N then we could say that the runtime was O(N) (remember to drop constants!). However what happens if the arrays are of different lengths?

Take a look at some code:

We are iterating through two arrays of different lengths. The runtime is O(A + B). Both runtime lengths must be considered!

Let’s take a look at some more code:

If the arrays were of equal length we could say that the runtime was O(N 2). However, the arrays are different lengths. This means that for every element of A, arrB will be iterated through. This results in a runtime of

O(A * B).

Thanks!

Thanks for reading my post. I hope that you found it useful. Once you understand the material presented here, be sure to continue your learning about Big-O.

Here are some topics that you should explore next:

Thanks again!

Good stuff! In “Consider Multiple Runtimes”, is there a typo on 2nd for loop?

Thanks! And good catch!